Learning PySpark Part 1: Setting up an Environment

by James Earnshaw on March 17, 2025

It was clear on day one of my data engineer job that I needed to learn Apache Spark (hereafter referred to as Spark). It's used in every client project, typically in Azure Databricks or Microsoft Fabric. We write Spark applications in notebooks using PySpark, the Python API for Spark.

I didn't know anything about Spark but dove right in and took notes that became this series of blog posts.

Scope and intended audience

This series is aimed at first-time data engineers: people who (like me) come from an on-premises business intelligence background and are most comfortable writing lots of star schema SQL queries (think facts and dimensions). Now you've landed your first data engineer job. Your new employer accepts that you're still learning Spark but trusts you to catch up quickly.

This isn't a tutorial about how to program in Python, or about how to use the JupyterLab notebook, so I am assuming some familiarity with these tools.

The focus of this series is on the Spark SQL module, specifically the DataFrames API, as it is implemented in PySpark. Don't expect to find anything about structured streaming, machine learning, or graph processing.

Series overview

Part 1 is a little bit about Spark and how to set up an environment to practice in. Part 2 introduces the DataFrame API and Part 3 focuses on using it to transform data. At the end of Part 3 enough of the API will have been covered to give you a working knowledge of PySpark, enabling you to translate your SQL knowledge into PySpark. From here you can use the excellent API reference. Part 4 brings it all together with some real-world examples.

Spark is a big topic

I set out to "learn Spark" but soon realised I had to be more specific because Spark is a big topic. It's a compute engine capable of running workloads across clusters of machines. The most popular use case is the kind of large-scale data processing typically found in data engineering and machine learning. It has libraries for batch processing, stream processing, graph processing, and interactive SQL querying. It supports multiple languages (Python, Java, Scala, R, SQL). It's the compute engine behind cloud platforms like Databricks and Microsoft Fabric.

So, as I said, a big topic. I chose to focus on PySpark, the Python API. I know SQL quite well, so my goal was to match my SQL fluency: I wanted to be as comfortable transforming data in PySpark as I am in SQL.

Spark and PySpark

Let's get clear on the terms. When I use the term "Spark" I'm referring to the engine generally, and when I use the term "PySpark" I'm referring to the Python package.

PySpark is the Python package used to author Spark applications in Python. It's equally valid to call it the Python API for Spark. Spark also has APIs for other languages, like R, Java, Scala, and SQL. Spark itself is written in Scala.

What about Scala?

Even though Spark is written in Scala, I get the impression that PySpark is pretty much the gold standard these days. PySpark has matured (especially since Spark 3.0) to the point where it's not necessary to learn Scala. Learning Scala wouldn't make sense for me anyway because our clients don't use it. They're all using Python in their notebooks.

Setting up an environment

The first thing to do is set up a local environment to practice in.

I run Spark in a Docker container on my workstation. Why Docker? Spark has a lot of dependencies, and I found setting it up locally (on Windows) to be such a pain that I gave up. It was getting in the way of my main goal: to learn PySpark. Besides, in a real-world data engineering job you (usually[^1]) won't be asked to set up a Spark cluster from scratch, because your employer is probably using Spark through a managed cloud platform, like Databricks or Microsoft Fabric. Mostly you'll just be authoring Python code in notebooks.

[^1]: It may be necessary to create a cluster as a compute resource on whatever platform you're using, but this simple task is done using a GUI where you choose things like the size and number of compute nodes.

What is Docker?

Docker is a platform built around the concept of containerization, a form of virtualization. Containers are like virtual machines (VMs), but more lightweight. The technicalities are beyond me, but I sort of get that containers are "more lightweight" because they share the host operating system's kernel, whereas each virtual machine runs its own full operating system on top of the host.

Containers simplify the operational side of application development. A container is an isolated environment that includes an application's code, runtime, dependencies, and libraries. All you need is the software (Docker Desktop) installed on your local workstation to run containers. Then using Spark with Docker is just a case of running a Spark container.

Install Docker Desktop

Docker Desktop is a cross-platform tool for managing containers, and it's free for personal use. Download and install it. On Windows this is straightforward, but I found installing it on Linux to be more involved. Either way, the instructions are well documented.

The installation of Docker Desktop includes the Docker CLI client that allows you to use Docker from the command line.

Getting a Spark image

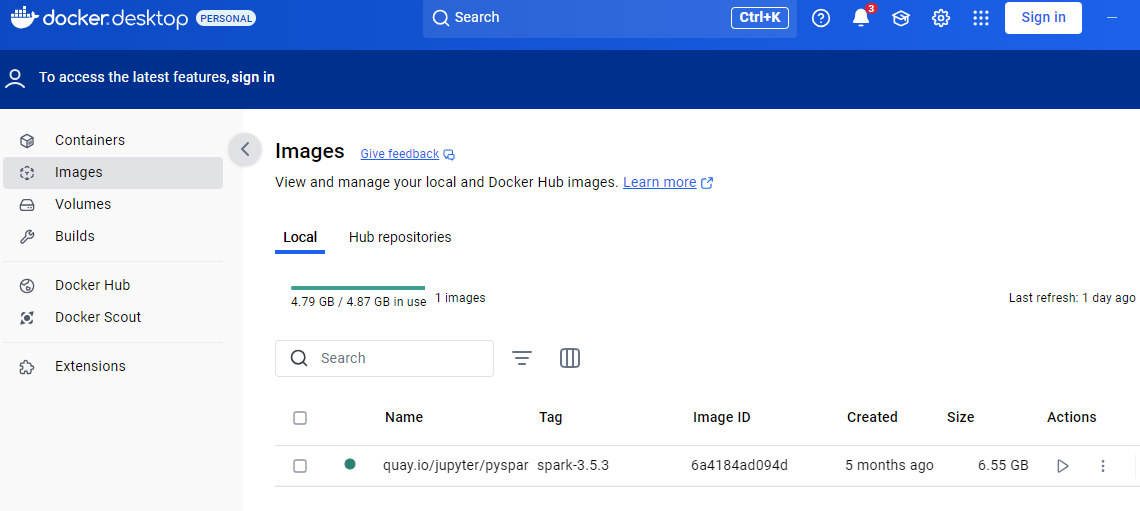

Images are read-only snapshots of a containerized environment. They include all of the dependencies and libraries required inside a container for an application to run. Because of this, images can be big files. The Spark image I use is 6.65 GB.

Images are shared and distributed on registries, the main one being Docker Hub, which is like GitHub for Docker images, so there are plenty of Spark images to choose from. I use the images published by Jupyter Docker Stacks, which are hosted on a registry called quay.io (their old images are still on Docker Hub, but they're no longer updated).

Here's a link to the image I'll be using: pyspark-notebook. I use the one tagged spark-3.5.3, but any spark-3.5.X tag is fine for following along. These images make it simple to run JupyterLab, a web-based development environment that includes the popular notebook interface for authoring Python.

To get an image onto your machine you need to "pull" it with the docker pull command, or, if you want to use a GUI, you can find and download it from Docker Desktop.

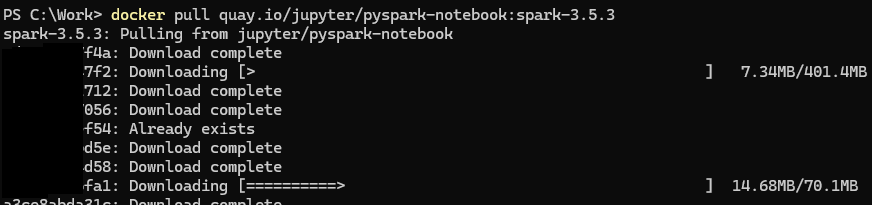

Run the following docker pull command to download the image onto your local machine:

```shell
docker pull quay.io/jupyter/pyspark-notebook:spark-3.5.3
```

There will be a flurry of activity in the terminal window and it will take a minute or two to complete, at which point the image will be available to use. Here it is in Docker Desktop:

There are several ways to list images using the CLI:

```shell
docker images
docker image list
docker image ls
```

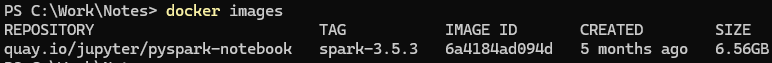

Use any of them to print image information to the terminal:

Now that we have an image we can use it to create a container.

Creating and running containers

Containers are created from images. Think of an image as a program and a container as a process. An image in execution is a container, just like a program in execution is a process.

Using Docker Desktop

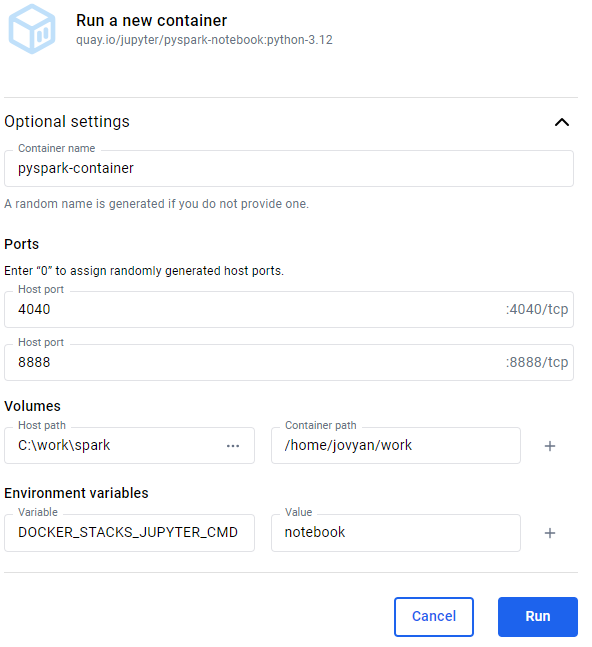

Containers can be created and run using Docker Desktop or the CLI client. In Docker Desktop, go to the Images view and click the Run action on the image listing. The following settings are optional:

- Container name: If you don't set a name, Docker assigns a random one for you, like "relaxed_babbage".
- Ports: A container is an isolated environment, and this isolation includes networking. Ports on the host (your workstation) need to be mapped to ports on the container so that traffic sent to the host is forwarded to the container. This is what lets the browser on your workstation reach the Jupyter server running inside the container, which is where the PySpark code you type into a notebook is actually executed. This task is called publishing ports. Set the host port numbers to match the container's internal ports:
    - `4040` is the default port for the Spark UI (a topic beyond the scope of this series).
    - `8888` is the port used to access a Jupyter Notebook from your local workstation.
- Volumes: A local path can be mapped to a path in the container. I set the host path to a folder where I keep the CSV datasets I plan to practice with (e.g., `C:\work\spark`). Set the container path to `/home/jovyan/work`[^2]. Notice the difference in paths: because the container is based on a Linux image, its path is a Linux path, whereas the host machine (a Windows machine) uses a Windows path.
- Environment variables: These are used to customize a container's behaviour. I don't usually bother, but I sometimes use the `DOCKER_STACKS_JUPYTER_CMD` environment variable to set which interactive frontend tool to use. The default value, `lab`, is JupyterLab. I sometimes set it to `notebook` for the minimalist experience of a Jupyter Notebook without the bells and whistles of JupyterLab.

[^2]: Jovyan is a term coined to refer to Jupyter users.

Here is an example:

Clicking Run both creates and runs the container.
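Once the container is running, you can check from your workstation that the published port answers before you go looking for the Jupyter URL. This is a minimal sketch, assuming you have Python installed on your workstation (outside the container) and that you published port 8888 as described above; `port_is_open` is a hypothetical helper, not part of Docker or Jupyter.

```python
import socket


def port_is_open(host: str = "127.0.0.1", port: int = 8888, timeout: float = 2.0) -> bool:
    """Return True if something on host:port accepts a TCP connection.

    Hypothetical helper; port 8888 assumes the Jupyter port was published
    as 8888:8888 (in Docker Desktop or with -p on the CLI).
    """
    try:
        # create_connection raises OSError (timeouts included) if nothing answers
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


if __name__ == "__main__":
    print("Jupyter port reachable:", port_is_open())
```

A `False` usually means the container isn't running or the port wasn't published. Docker may accept connections on a published port before Jupyter has finished starting, so a `True` confirms the port mapping rather than a ready server.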

Using the CLI

I prefer to use the Docker CLI. One reason is that you can set more options, like the working directory.

Here's an example of running a container with some options using the CLI (from PowerShell):

```powershell
docker run `
  --name pyspark-practice `
  -it `
  --rm `
  -p 8888:8888 `
  -p 4040:4040 `
  -d `
  -v "C:\work\spark:/home/jovyan/work" `
  -e DOCKER_STACKS_JUPYTER_CMD=notebook `
  -w "/home/jovyan/work" `
  quay.io/jupyter/pyspark-notebook:spark-3.5.3
```

Let's go through these options (the full list is in the documentation):

- `--name` sets the name of the container.
- `-it` is two options, often used together: `-i` (the short form of `--interactive`) and `-t` (the short form of `--tty`). My understanding is that you would use this pair of options if you intend to interact with the container using shell commands.
    - `--interactive`, `-i` allows shell commands to be sent to the container interactively.
    - `--tty`, `-t`, according to the docs, "allocates a pseudo TTY". I've seen it explained as "it connects your terminal with stdin (standard input) and stdout (standard output)". It basically means that the output from the container is displayed in the PowerShell (or Bash) session that ran the `run` command.
- `--rm` automatically deletes the container when it stops. It's basically a clean-up option.
- `-p` specifies the ports to publish, as `host:container` pairs.
- `-d` is for detached. Use it to run the container in the background.
- `-v` sets the volumes. In this example we're indicating that the local directory `C:\work\spark` should map to the container's directory `/home/jovyan/work`. You could also set the host path to `"${PWD}"`, which uses the current directory of the PowerShell (or Bash) session.
- `-e` sets environment variables. This example sets the `DOCKER_STACKS_JUPYTER_CMD` environment variable, which sets the frontend tool, e.g., `notebook` (Jupyter Notebook). `lab` (JupyterLab) is the default (see the documentation).
- `-w` sets the working directory inside the container. Setting it equal to the container's volume path is useful because it means you can start reading files into Spark DataFrames without having to change directories.

I recommend the following options if you're unsure:

```powershell
docker run `
  --name pyspark-practice `
  -p 8888:8888 `
  -p 4040:4040 `
  -v "C:\work\spark:/home/jovyan/work" `
  -w "/home/jovyan/work" `
  -d `
  quay.io/jupyter/pyspark-notebook:spark-3.5.3
```

I don't usually delete the container (`--rm`) because I don't want to configure and create a new one every time. I'm happy to stop and start the same container using the GUI in Docker Desktop. I don't use the `-it` options because I haven't found a use for them; my time is spent learning PySpark in a notebook, not interacting with the container through shell commands. I use detached mode (`-d`) because I'm creating the container using the CLI, and thereafter I'll stop and start it using the buttons in Docker Desktop.
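If you'd rather script container creation than retype the command, the recommended options can also be assembled in Python and handed to `subprocess`. This is a sketch under my own assumptions, not something the Jupyter images require: `docker_run_args` and `create_container` are hypothetical helpers, and their defaults simply mirror the recommended command. Passing a list of arguments also sidesteps the line-continuation differences between PowerShell (backtick) and Bash (backslash).

```python
import subprocess


def docker_run_args(
    name: str = "pyspark-practice",
    host_dir: str = r"C:\work\spark",
    image: str = "quay.io/jupyter/pyspark-notebook:spark-3.5.3",
    ports: tuple[int, ...] = (8888, 4040),
) -> list[str]:
    """Build a docker run argument list mirroring the recommended options (hypothetical helper)."""
    args = ["docker", "run", "--name", name]
    for port in ports:
        # Publish each host port to the same port inside the container
        args += ["-p", f"{port}:{port}"]
    args += [
        "-v", f"{host_dir}:/home/jovyan/work",  # mount the practice folder
        "-w", "/home/jovyan/work",              # start in the mounted folder
        "-d",                                   # detached: run in the background
        image,                                  # options must come before the image name
    ]
    return args


def create_container(**kwargs) -> None:
    """Run docker with the assembled arguments; raises CalledProcessError if docker fails."""
    subprocess.run(docker_run_args(**kwargs), check=True)
```

Calling `create_container()` once creates and starts the container; after that you can stop and start it from Docker Desktop as before. A second call fails, because the name `pyspark-practice` is already in use.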

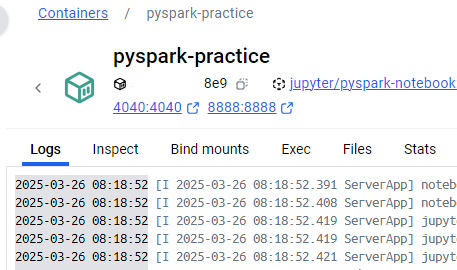

Accessing the frontend

Now that you've created a container, the next step is to open the JupyterLab frontend. This is the environment in which we'll be writing PySpark programs. Check the logs of the running container for the URLs to access JupyterLab (or a different frontend if you changed the default). You can view the logs by selecting the container in Docker Desktop, or from the CLI with `docker logs pyspark-practice`.

The log messages we're interested in look like this:

```text
To access the server, open this file in a browser:
file:///home/jovyan/.local/share/jupyter/runtime/jpserver-7-open.html
Or copy and paste one of these URLs:
http://<hostname>:8888/lab?token=<token>
http://127.0.0.1:8888/lab?token=<token>
```
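Because Jupyter typically generates a new token each time the server starts, you may find yourself hunting for this line after every restart. Here is a minimal sketch of pulling the `127.0.0.1` URL out of the logs with Python on your workstation. `find_local_url` and `local_url_from_container` are hypothetical helpers, and the regular expression assumes the URL format shown in the log above.

```python
import re
import subprocess
from typing import Optional

# Matches URLs like http://127.0.0.1:8888/lab?token=abc123 (format assumed from the log above)
LOCAL_URL = re.compile(r"http://127\.0\.0\.1:\d+/\S*\?token=\w+")


def find_local_url(log_text: str) -> Optional[str]:
    """Return the most recent 127.0.0.1 URL in the log text, or None if there isn't one."""
    matches = LOCAL_URL.findall(log_text)
    # A restarted container appends new startup messages, so the last match is the current one
    return matches[-1] if matches else None


def local_url_from_container(name: str = "pyspark-practice") -> Optional[str]:
    """Read the container's logs with the Docker CLI and extract the local URL."""
    result = subprocess.run(["docker", "logs", name], capture_output=True, text=True, check=True)
    # Jupyter writes its startup messages to stderr, so search both streams
    return find_local_url(result.stdout + result.stderr)
```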

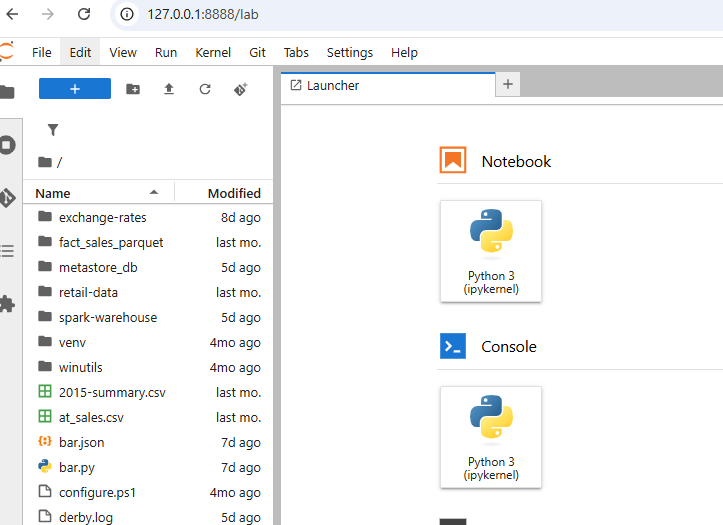

I use the second URL: http://127.0.0.1:8888/lab?token=<token>. Open this in a browser and you will be directed to JupyterLab.

Notice that the file browser shows the contents of the C:\work\spark folder on my workstation, which is mounted in the container at /home/jovyan/work.
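To confirm from inside the container that the volume mapping worked, you can run a quick check in a notebook cell. This is a sketch assuming the container path `/home/jovyan/work` used above; `describe_workspace` is a hypothetical helper, and listing only `*.csv` files reflects how I use the folder, not a requirement.

```python
import os
from pathlib import Path


def describe_workspace(work_dir: str = "/home/jovyan/work") -> dict:
    """Summarize what the notebook kernel can see of the mounted folder (hypothetical helper)."""
    work = Path(work_dir)
    exists = work.is_dir()
    return {
        "work_dir": str(work),
        "exists": exists,
        # CSV files in the mounted folder, i.e. the files you put in the host folder
        "csv_files": sorted(p.name for p in work.glob("*.csv")) if exists else [],
        # The kernel's current directory, which affects how relative file paths resolve
        "cwd": os.getcwd(),
        # None usually means the variable wasn't set with -e, so the default frontend is used
        "jupyter_cmd": os.environ.get("DOCKER_STACKS_JUPYTER_CMD"),
    }


describe_workspace()
```

If `exists` is `False`, the volume path in your `docker run` command (or the Docker Desktop Volumes setting) probably doesn't match the path the function is checking.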

A first PySpark program

At this point we have a Spark "cluster" (really, Spark running in local mode inside the container on your machine) set up, and an environment in which we can start learning PySpark.

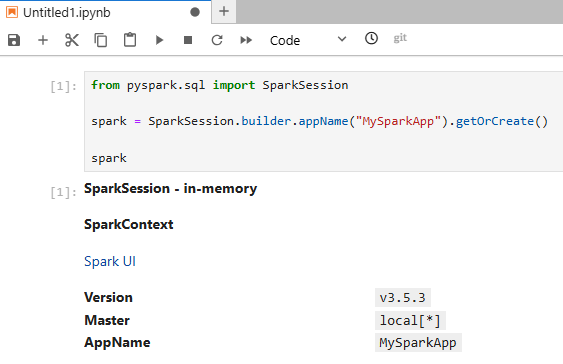

Add a notebook by clicking the Python 3 (ipykernel) button. Type the following Python code into the cell and press Shift + Enter to run it. If you don't understand the code, don't worry. It's standard boilerplate for creating Spark applications, and I will explain it in part 2 of the series.

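```python
from pyspark.sql import SparkSession

# Create a SparkSession, or reuse the one that already exists in this kernel
spark = SparkSession.builder.appName("MySparkApp").getOrCreate()

# Evaluating the session as the last line of a cell displays a summary of it
spark
```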

This is what you should see:

That's it for part 1.

Conclusion of part 1

The goal of this series is to give you a working knowledge of PySpark, the Python API for Apache Spark. It's aimed at people with a data warehousing or business intelligence background who write a lot of fact and dimension queries. You probably know a little bit of Python but need to learn the essentials of PySpark quickly.

In part 1 I've shown you how to set up an environment using Docker. At this point you can go ahead and create small Spark applications to learn the PySpark API. In part 2 I will introduce the DataFrame API, and in part 3 I will go into detail about how to transform data with the PySpark equivalents of the common SQL clauses and functions you're familiar with (e.g., SELECT, GROUP BY, ORDER BY, and SUM).